This winter, as Lancaster colleges celebrated yet another hard-earned end to their semesters and sent students home for the first leg of an icy winter, local educators had another anniversary to acknowledge: one year since the release of the highly controversial AI tool, ChatGPT.

With recent absurd headlines ranging from “Using artificial intelligence to talk to the dead” in The Seattle Times to “Imran Khan deploys AI clone to campaign from behind bars in Pakistan” in The Guardian, AI has proven its utility, for better or worse, even as critics condemn its often devastating algorithmic bias.

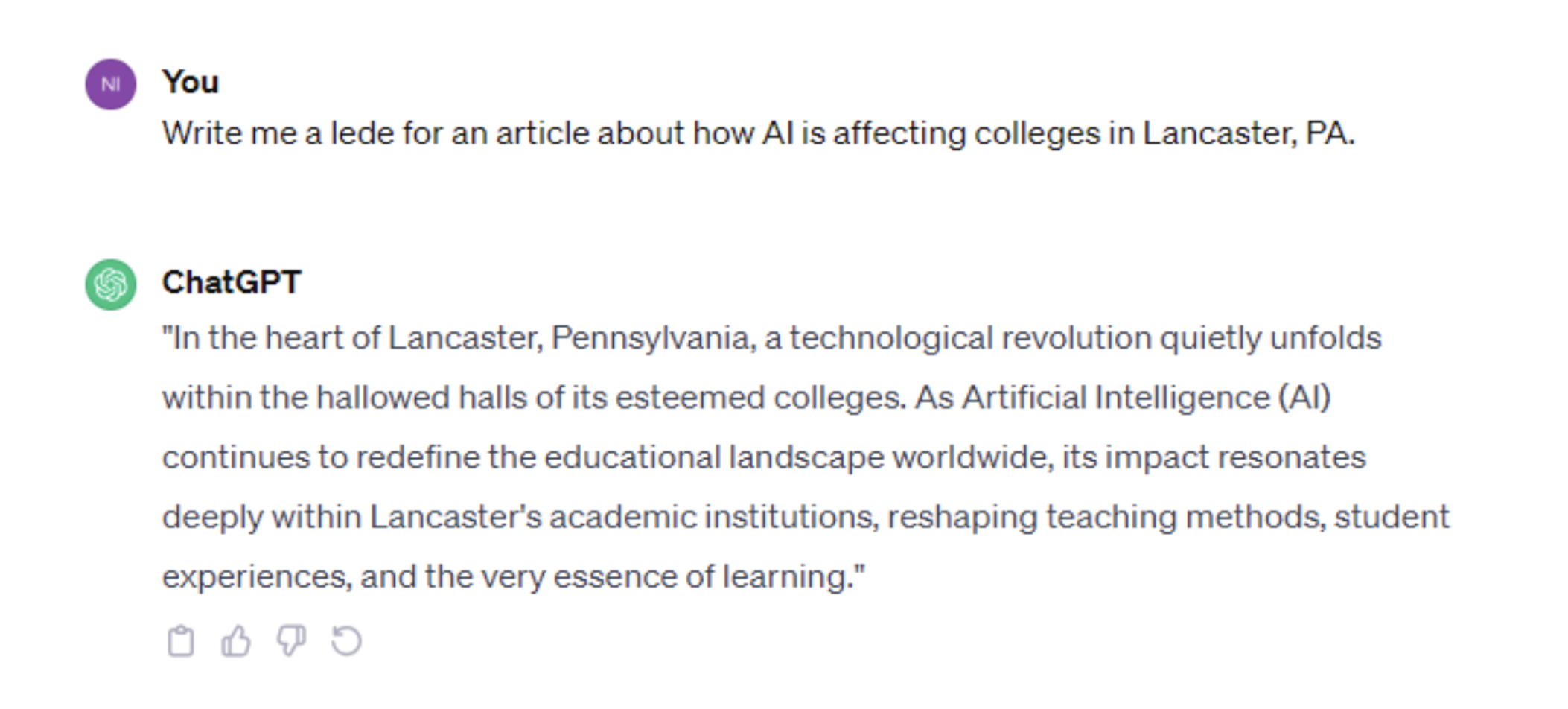

Among undergraduates, 2023 saw AI grow into an academic tool used for brainstorming, problem-solving, and, as students confirmed through a live anonymous survey during last week’s Common Hour panel, paper-writing. ChatGPT answers student math problems in seconds. With only a short prompt, it writes essays worthy of mediocre but certainly passing grades, even if its citations are wholly made up. High school and college educators have been forced to grapple with new classroom rules: whether to embrace or condemn AI, and what ‘embracing AI’ could possibly mean.

January’s final Common Hour was a campus discussion on “Generative AI” and its implications for the college. A panel of faculty, staff, and students led the conversation, moderated by Kelly Miller of ITS.

“AI doesn’t know anything about you. Your hopes, your dreams, your career plan,” said Jennifer Redmann, Professor of German, during a long back-and-forth about the pros and cons of AI use and how to incorporate it into a liberal arts education. “The importance of writing instruction is greater than ever.”

One year in, how is F&M holding up against robotic algorithms?

“Something we can learn.”

When the administration first learned of ChatGPT, the Committee on Student Conduct immediately reached out to Kelly Miller, F&M’s Senior Instructional Designer, for help. They needed to learn the technology, fast.

According to Colette Shaw, Dean of Students, Lee Franklin, Professor of Philosophy and Interim Director of the Faculty Center, spent the summer of 2023 working with Miller to plan the annual faculty symposium as an AI learning experience, with workshops, data showcases, and the creation of a “robust” AI faculty resource site. Brad McDonel, Assistant Professor of Computer Science, also serves on the conduct committee, bringing some AI expertise to the table.

“This is something we can learn,” Shaw said. This past weekend, the Association for Student Conduct Administration (ASCA) held its annual conference, which began on February 1. There, Shaw presented on how AI has affected student conduct at F&M. “I have a story to tell, and it’s not the story you think you’re going to hear.”

Academic Conduct

The first AI student conduct case occurred on March 31, 2023. After that, 10 of the 14 remaining academic misconduct cases of the semester were AI-related. While the total was not overwhelming, Shaw emphasized how quickly the cases accumulated between the end of March and May.

In fall 2023, the college saw 52 academic conduct cases. That is roughly 60 percent more than the previous fall (32) and two more than the previous spring (50). Half of these cases stemmed from suspected AI use. Initially, the Committee was “pretty freaked out,” according to Shaw.

“We learned, approached this with a lot of curiosity with students,” she said. “It’s not just this binary: you cheated or you didn’t.”

Some AI tools, such as ChatGPT, openly generate text. Other applications present themselves as simple spell checkers or writing enhancers. One example is Grammarly, which bills itself as “Free AI Writing Assistance.” Students rely on tech-based writing aids to improve their work, restructure a too-long sentence, or fix the grammar after translating a lengthy essay from one language to another. International students make up twenty percent of F&M’s student body. According to Shaw, this makes the use of such aids nearly inevitable, despite campus support systems like the Writing Center and the International and Multilingual Support Center.

Shaw emphasized the need to ask students about AI use rather than assume the worst. She acknowledged that the technology is new, and the administration’s ability to recognize AI-generated essays is even newer. She said that, when asked, students almost always explained their behavior. Only a minority refused to admit AI use when confronted.

“Some were curious about it, some were time-crunched,” said Shaw. “It’s academic strategies, panic. A lot is about confidence. I really wanted to give my professor something good.”

When a student is called to a disciplinary hearing over suspected AI use, faculty are strongly encouraged to attend. Every student has a personal writing style that their professors know best. That style is distinct from what Shaw calls the voice of “a happy little robot.” Shaw did not disclose the exact outcomes of the fall’s AI cases. She said they almost certainly mirrored the overall distribution of academic misconduct outcomes: 21 reprimands, a few probations, and 6 warnings.

While overall suspension numbers were not shared, Shaw confirmed that no one has been suspended for AI use.

“We’re nice,” said Shaw. “But we do say, we can never be back here again.”

There have not been any repeat offenders for AI conduct cases.

Is F&M running behind?

While F&M has largely handled AI behind the scenes, Millersville University took a slightly different path. After ChatGPT’s launch, Marc Tomljanovich met with the provost. Tomljanovich is Dean of the Lombardo College of Business and now heads Millersville’s AI Task Force. Together, he and the provost launched the task force in January 2023.

“It was very, very apparent to me at the very beginning just how transformational it is. I can’t imagine a single industry unaffected by this,” said Tomljanovich. “I’ve been teaching for 20 years… How can we start weaving it into our curriculum?”

The task force has been responsible for two major changes at the university. It has hosted a series of educational workshops on AI use. It has also updated the school’s academic honesty policy to reflect modern times. The policy hadn’t been substantially revised since 2007, so the update gave current faculty a chance to add language addressing cell phones, hacking, online repositories such as Chegg, and AI. F&M reviews its academic honesty policy annually, though it is unclear when the policy was last revised.

Despite these changes, Tomljanovich admits that “there’s no real way to tell” whether a student has used AI. Even long-trusted programs like Turnitin produce too many false positives. Tomljanovich said he was unaware of any uptick in academic conduct cases due to AI, since it’s usually “nipped in the bud by professors.” Still, he said, “around ten faculty” reached out to the task force “thinking students might be using it” during finals season last spring.

AI is complicated, difficult to recognize (sometimes), and even more difficult to penalize. Academic landscapes continue to shift toward alignment with new technologies, blending auto-generated content with… whatever it is that makes us people.

“I found myself saying that in a really rapid time of technological change,” said Jeff Nesteruk, Professor of Legal Studies and Future of Work expert, at the end-of-January Common Hour. “We need to ask what it is that’s distinctively human that we bring to our labors.”

Regardless of where AI is headed, F&M’s 2,000 students make up its creative foundation. The college has yet to settle how it will navigate the rapidly advancing fusion of human scholarship and smart technology. That course will be shaped by the administration, by the faculty serving on conduct committees, and by the students who show up to campus-wide talks.

“It’s crazy what you can do,” said Tomljanovich in awe. “In some ways, you’re only limited by your imagination.”

Senior Sarah Nicell is a Staff Writer for The College Reporter. Their email is snicell@fandm.edu.